The attention network is at the core of Transformers, Vision Transformers, and Large Language Models (LLMs). Understanding the attention mechanism and the neural architecture of the attention network makes the Transformer architecture, and LLMs in general, much easier to understand.

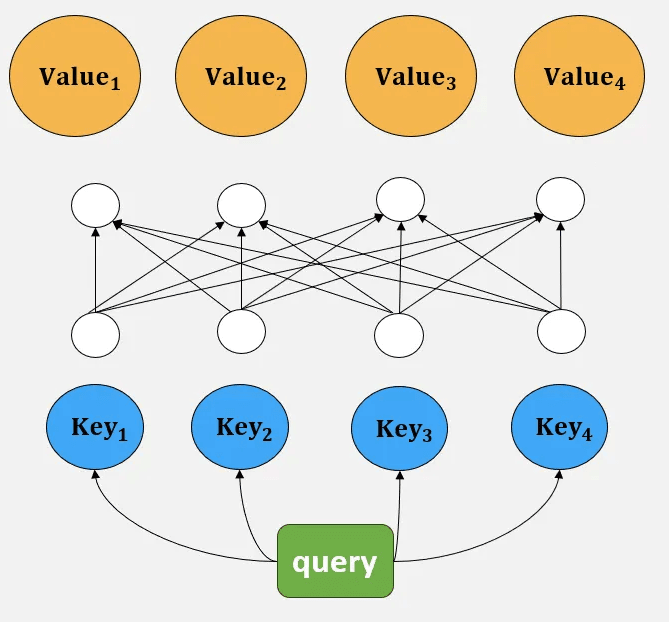

The attention mechanism is a neural architecture that resembles retrieving a value from a key-value store, such as a dictionary, based on some condition (the query).

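In the attention mechanism, however, this retrieval is probabilistic and follows the attention equation:

$$\text{attention}(q, K, V) = \sum_i a_i v_i$$

where each weight $a_i \ge 0$ measures how well the query $q$ matches the key $k_i$, and the weights sum to 1.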

The attention mechanism is a generalization of dictionary retrieval. When a dictionary retrieves a value for a query, the similarity between the query and exactly one key is 1, and the similarity between the query and every other key is 0, so each query returns exactly one value. In an attention network, we instead retrieve a weighted combination of all the values. Because these weights change smoothly with the query and the keys, the whole operation is differentiable, which is what makes back-propagation possible.
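A minimal sketch of the difference in plain Python. The helper `retrieve`, the toy keys and values, and the soft weights are hypothetical, chosen only for illustration. A dictionary lookup is the special case where the weight vector is one-hot; attention replaces it with graded weights.

```python
keys = ["cat", "dog", "bird"]
values = [1.0, 2.0, 3.0]
query = "dog"

def retrieve(weights, values):
    """Weighted combination of the values: sum_i w_i * v_i."""
    return sum(w * v for w, v in zip(weights, values))

# Dictionary-style (hard) retrieval: similarity 1 for the matching key, 0 for the rest.
hard_weights = [1.0 if k == query else 0.0 for k in keys]
hard_result = retrieve(hard_weights, values)

# Attention-style (soft) retrieval: hypothetical graded weights,
# positive and summing to 1, so every value contributes a little.
soft_weights = [0.2, 0.7, 0.1]
soft_result = retrieve(soft_weights, values)

print(hard_result)  # 2.0
print(soft_result)
```

The hard result equals `dict(zip(keys, values))[query]`. The soft result is pulled toward the value of the best-matching key, but because it varies smoothly with the weights, gradients can flow back into whatever produced them.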

The attention value is obtained in three steps: compute the similarity between the query and each key, convert these similarities into activation values, and then apply the attention function, i.e., the weighted sum of the values.
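A small worked example makes the three steps concrete. The numbers are made up for illustration, and it assumes dot-product similarity and softmax activations ($a_i = e^{s_i} / \sum_j e^{s_j}$), the most common choices. Take the query $q = (1, 0)$, keys $k_1 = (1, 0)$ and $k_2 = (0, 1)$, and scalar values $v_1 = 10$ and $v_2 = 20$:

$$
\begin{aligned}
s_1 &= q^\top k_1 = 1, \qquad s_2 = q^\top k_2 = 0 \\
a_1 &= \frac{e^{1}}{e^{1} + e^{0}} \approx 0.731, \qquad a_2 = \frac{e^{0}}{e^{1} + e^{0}} \approx 0.269 \\
a_1 v_1 + a_2 v_2 &\approx 0.731 \cdot 10 + 0.269 \cdot 20 \approx 12.69
\end{aligned}
$$

Most of the weight goes to $v_1$ because $k_1$ matches the query, yet $v_2$ still contributes.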

The first layer of the attention network consists of the keys $k_1, \dots, k_n$ and the similarity scores between the query $q$ and each key:

$$s_i = f(q, k_i), \quad i = 1, \dots, n$$

where $f$ is the similarity function. The most commonly used similarity functions are:

1. Dot Product:

$$s_i = q^\top k_i$$

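2. Scaled Dot Product:

$$s_i = \frac{q^\top k_i}{\sqrt{d}}$$

where $d$ is the dimensionality of each key. The advantage of the scaled dot product over the plain dot product is that it keeps the scores in a stable range. If the components of $q$ and $k_i$ are independent with mean 0 and variance 1, then $q^\top k_i$ has variance $d$, so high-dimensional keys produce large scores that push the softmax activation into saturation, where gradients are tiny. Dividing by $\sqrt{d}$ brings the variance back to 1.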

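3. General Dot Product:

$$s_i = q^\top W k_i$$

where $W$ is a learned weight matrix. The general dot product projects the query into the key space, so the query and the keys are compared in the same space. Since $W$ can be rectangular, the query and the keys may even have different dimensions.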

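4. Additive Similarity:

$$s_i = w^\top \tanh\left(W [q; k_i]\right)$$

where $q$ is the query, $[q; k_i]$ is the concatenation of the query and the key, $W$ is a weight matrix, and $w$ is a weight vector that reduces the hidden vector to a single score.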
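The four similarity functions can be sketched in plain Python. Vectors are lists, matrices are lists of rows, and all function names are hypothetical. In a real network $W$ and $w$ are learned parameters; here they are simply passed in. The additive variant is written as $w^\top \tanh(W[q; k_i])$, which is one common form of additive attention and an assumption of this sketch.

```python
import math

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [dot(row, x) for row in W]

def dot_product(q, k):
    # 1. s_i = q^T k_i
    return dot(q, k)

def scaled_dot_product(q, k):
    # 2. s_i = q^T k_i / sqrt(d), where d is the dimensionality of the key
    return dot(q, k) / math.sqrt(len(k))

def general_dot_product(q, k, W):
    # 3. s_i = q^T W k_i; W has len(q) rows and len(k) columns,
    # so q and k may have different dimensions
    return dot(q, matvec(W, k))

def additive(q, k, W, w):
    # 4. s_i = w^T tanh(W [q; k_i]); q + k concatenates the two lists
    hidden = [math.tanh(h) for h in matvec(W, q + k)]
    return dot(w, hidden)

q = [1.0, 2.0]
k = [3.0, 4.0]
identity = [[1.0, 0.0], [0.0, 1.0]]
W_add = [[0.1, 0.2, 0.3, 0.4], [0.0, 0.1, 0.0, 0.1]]  # hypothetical weights
w_add = [1.0, -1.0]                                   # hypothetical weights

print(dot_product(q, k))                     # 11.0
print(scaled_dot_product(q, k))              # 11 / sqrt(2), about 7.78
print(general_dot_product(q, k, identity))   # 11.0
print(additive(q, k, W_add, w_add))
```

With the identity matrix, the general dot product reduces to the plain dot product, which is a quick sanity check on the implementation.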

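The next layer computes the activation values $a_i$, which act as hidden nodes. Each $a_i$ depends on its own score $s_i$ and on a sum over all the similarity scores, so every activation node is connected to every similarity node: the two layers are fully connected. The activation is the softmax of the scores:

$$a_i = \frac{\exp(s_i)}{\sum_j \exp(s_j)}$$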
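A minimal sketch of the activation layer in plain Python. The function name `softmax` is an illustrative choice. Subtracting the maximum score before exponentiating is a standard trick: the common factor cancels, so the result is unchanged, but `math.exp` no longer overflows on large scores.

```python
import math

def softmax(scores):
    """a_i = exp(s_i) / sum_j exp(s_j), computed in a numerically stable way."""
    m = max(scores)                       # shift by the max; cancels in the ratio
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)                     # the sum over all similarity scores
    return [e / total for e in exps]

a = softmax([2.0, 1.0, 0.1])
print([round(x, 3) for x in a])   # [0.659, 0.242, 0.099]
print(softmax([1000.0, 1000.0]))  # [0.5, 0.5], no overflow
```

Because every $a_i$ is divided by the same total, changing any one score changes all the activations, which is the fully connected structure described above.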

Finally, the attention value is computed by adding up the products of each $a_i$ and $v_i$:

$$\sum_i a_i v_i = a_1 v_1 + a_2 v_2 + \cdots$$
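Putting the layers together gives a minimal single-query sketch in plain Python. It assumes scaled dot-product similarity (any of the four functions could be swapped in), and the function name `attention` and the toy vectors are hypothetical. Values may be vectors, so the weighted sum is taken component by component.

```python
import math

def attention(q, keys, values):
    """Single-query attention: similarity -> softmax -> weighted sum of values."""
    d = len(q)
    # Layer 1: similarity scores s_i = q^T k_i / sqrt(d)
    scores = [sum(x * y for x, y in zip(q, k)) / math.sqrt(d) for k in keys]
    # Layer 2: activations a_i = softmax(s)_i, shifted by the max for stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Output: sum_i a_i v_i, computed component-wise for vector values
    dim_v = len(values[0])
    out = [sum(a * v[j] for a, v in zip(weights, values)) for j in range(dim_v)]
    return out, weights

q = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 20.0]]
out, weights = attention(q, keys, values)
print(weights)
print(out)
```

In Transformers the same computation is batched over many queries at once with matrix operations; this loop version only mirrors the layer-by-layer description.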